Top 17 Trending Python Projects on GitHub

January 2026 trending Python repos

The Python ecosystem this month is dominated by Claude Skills and AI agent tooling. This overview analyzes the top trending Python repositories on GitHub.

January 2026 trending Rust repos

The Rust ecosystem is exploding with innovative projects, particularly in AI coding tools and terminal applications. This overview analyzes the top trending Rust repositories on GitHub this month.

January 2026 trending Go repos

The Go ecosystem continues to thrive with innovative projects spanning AI tooling, self-hosted applications, and developer infrastructure. This overview analyzes the top trending Go repositories on GitHub this month.

Real AUD pricing from Aussie retailers now

The NVIDIA DGX Spark (GB10 Grace Blackwell) is now available in Australia at major PC retailers with local stock. If you’ve been following the global DGX Spark pricing and availability, you’ll be interested to know that Australian pricing ranges from $6,249 to $7,999 AUD depending on storage configuration and retailer.

Cut LLM costs by 80% with smart token optimization

Token optimization is the critical skill separating cost-effective LLM applications from budget-draining experiments.

Build MCP servers for AI assistants with Python examples

The Model Context Protocol (MCP) is revolutionizing how AI assistants interact with external data sources and tools. In this guide, we’ll explore how to build MCP servers in Python, with examples focused on web search and scraping capabilities.

Availability, real-world retail pricing across six countries, and comparison against Mac Studio.

NVIDIA DGX Spark is real, on sale Oct 15, 2025, and targeted at CUDA developers needing local LLM work with an integrated NVIDIA AI stack. US MSRP $3,999; UK/DE/JP retail is higher due to VAT and channel. AUD/KRW public sticker prices are not yet widely posted.

Integrate Ollama with Go: SDK guide, examples, and production best practices.

This guide provides a comprehensive overview of available Go SDKs for Ollama and compares their feature sets.

Comparing the speed, parameters, and performance of these two models

Here is a comparison of Qwen3:30b and GPT-OSS:20b, focusing on instruction following, performance parameters, specs, and speed:

+ Specific Examples Using Thinking LLMs

In this post, we’ll explore two ways to connect your Python application to Ollama: 1. Via HTTP REST API; 2. Via the official Ollama Python library.
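As a rough illustration of the first route (plain HTTP), here is a minimal sketch using only the standard library. It assumes Ollama is running locally on its default port 11434 and uses the `/api/generate` endpoint with streaming turned off; the helper names `build_generate_request` and `generate` are made up for this example and are not part of any library.

```python
import json
import urllib.request

# Assumption: a local Ollama instance listening on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the request and return the generated text.

    Requires a running Ollama server with the given model pulled.
    """
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read())
    # With "stream": False the reply is a single JSON object whose
    # "response" field holds the full completion.
    return body["response"]
```

With a server running, `generate("qwen3:30b", "Why is the sky blue?")` would return the completion as a string; the model name is only an example and has to be one you have pulled locally.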

Not very nice.

Ollama’s GPT-OSS models have recurring issues handling structured output, especially when used with frameworks like LangChain, OpenAI SDK, vLLM, and others.

Slightly different APIs require a special approach.

Here’s a side-by-side comparison of structured output support (getting reliable JSON back) across popular LLM providers, plus minimal Python examples.

A couple of ways to get structured output from Ollama

Large Language Models (LLMs) are powerful, but in production we rarely want free-form paragraphs. Instead, we want predictable data: attributes, facts, or structured objects you can feed into an app. That’s LLM Structured Output.

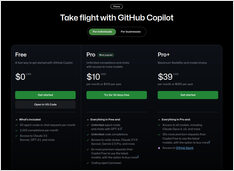

Description, plans, command list, and keyboard shortcuts

Here is an up-to-date GitHub Copilot cheat sheet, covering essential shortcuts, commands, usage tips, and context features for Visual Studio Code and Copilot Chat.

Long read about MCP specs and implementation in Go

Here is a description of the Model Context Protocol (MCP), with short notes on how to implement an MCP server in Go, including message structure and protocol specifications.

Implementing RAG? Here are some Go code bits - 2...

Since standard Ollama doesn’t have a direct rerank API, you’ll need to implement reranking using Qwen3 Reranker in Go by generating embeddings for query-document pairs and scoring them.
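The scoring step might look roughly like the sketch below. It is written in Python rather than Go to keep it short, and it assumes one particular scoring rule, cosine similarity between a query embedding and each document embedding, which may differ from what the post itself uses. The embeddings are taken as plain lists of floats obtained elsewhere; `cosine_similarity` and `rerank` are illustrative names.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rerank(query_vec, doc_vecs, top_k=None):
    """Return (document index, score) pairs sorted by descending score.

    Assumption: higher cosine similarity means a more relevant document.
    """
    scored = [
        (index, cosine_similarity(query_vec, vec))
        for index, vec in enumerate(doc_vecs)
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored if top_k is None else scored[:top_k]
```

The returned indices can then be used to reorder the retrieved documents before they are passed to the generation step.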