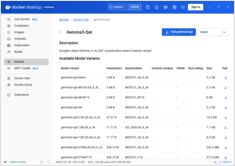

Local LLM Hosting: Complete 2026 Guide - Ollama, vLLM, LocalAI, Jan, LM Studio & More

Master local LLM deployment with 12+ tools compared

Running large language models (LLMs) locally has become increasingly popular as developers and organizations seek stronger privacy, lower latency, and greater control over their AI infrastructure.